From AI Proof of Concept to Production: Choosing Platforms That Scale Without the Pain

by James Gregory | 15/12/2025

If your AI proof of concept works but your product still isn’t scaling, you’re not alone.

Many organisations discover that building an AI demo is relatively easy. A model performs well in isolation, a pilot impresses stakeholders, and early results look promising. But the moment AI moves into production — with real users, real data volumes, security requirements, and operational constraints — complexity escalates fast.

In practice, most AI initiatives don’t stall because the model is bad. In our work with organisations at this stage, we repeatedly see teams underestimate what it takes to deploy, run, and maintain AI systems reliably at scale. This is where platform and architecture choices stop being technical details and become business-critical decisions.

Azure AI Foundry: Reducing Operational Complexity at Scale

As AI initiatives move beyond early experimentation, many teams discover that model performance is no longer the main challenge. Instead, complexity emerges around managing multiple services, versions, environments, access controls, and dependencies across teams.

What often starts as a single proof of concept quickly turns into a fragmented setup that is difficult to operate, govern, and scale safely. This is a common point where AI projects slow down — not because the idea is wrong, but because the operational overhead grows faster than expected.

This is where platforms like Azure AI Foundry help reduce that complexity. Rather than forcing teams to stitch together multiple disconnected services, Foundry provides a unified way to manage and orchestrate AI capabilities such as language, vision, and document processing across the product lifecycle.

By abstracting infrastructure and service management behind managed APIs and tools, teams can spend less time keeping systems alive and more time focusing on user-facing functionality, iteration speed, and business outcomes.

In practice, this shift matters because operational simplicity directly affects delivery velocity, reliability, and long-term maintainability, especially once AI becomes a core part of a product rather than an experiment. At this stage, teams often realise that their initial architectural choices shape everything that follows.

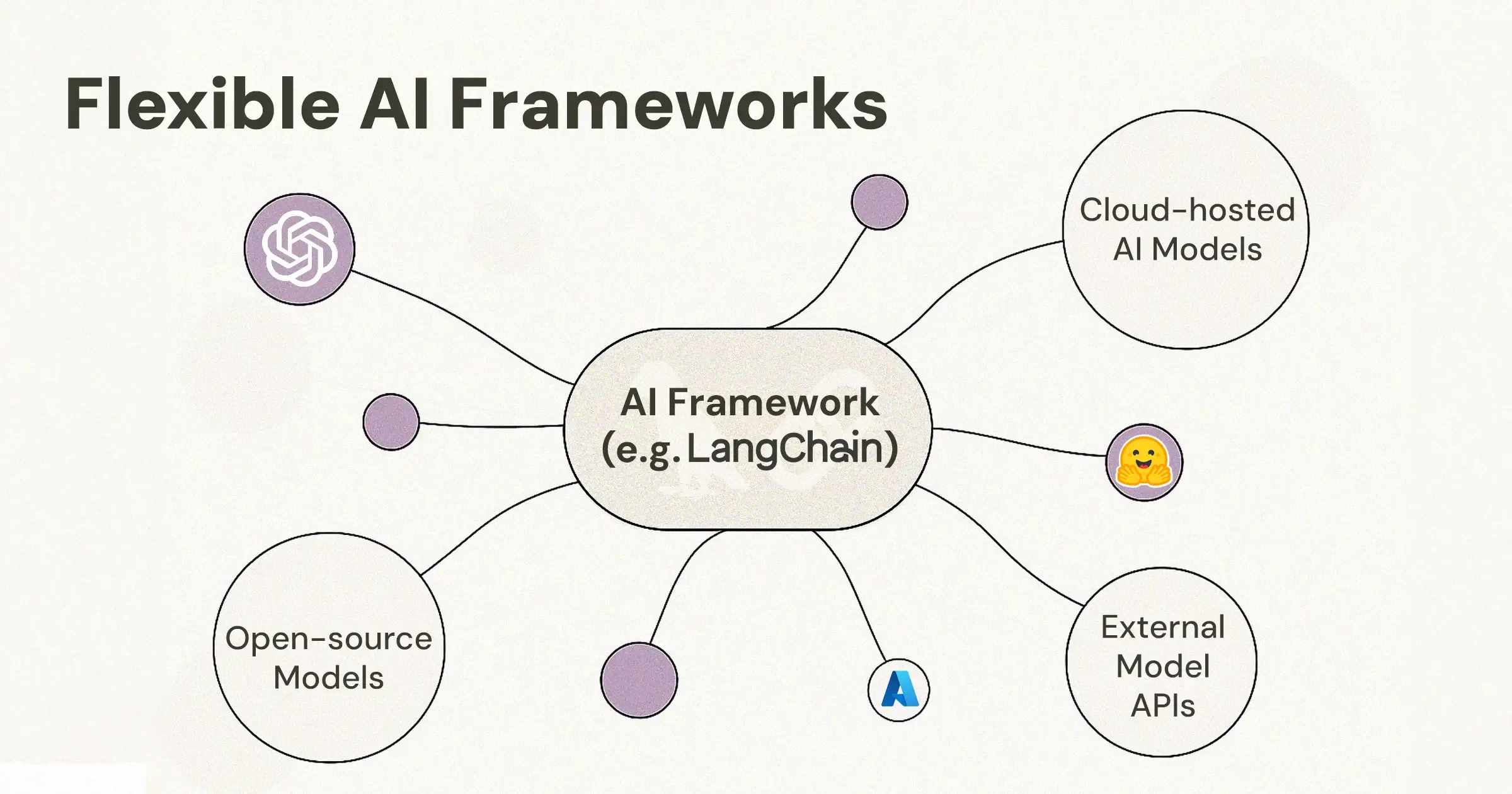

Flexibility & Model Interoperability: Avoiding Early Lock-In

Early AI experiments often optimise for speed: pick a model, wire it up, ship a demo. That approach works — until requirements change. New data sources appear, regulations tighten, costs rise, or performance expectations shift.

At that point, many teams realise they have unintentionally locked themselves into a specific model, provider, or architectural pattern. What once felt like a shortcut becomes a constraint.

At scale, flexibility is not a technical luxury — it is a risk management strategy. Organisations need the ability to combine different model types (language, embeddings, vision, document processing) and adjust their approach without rewriting large parts of their systems.

Azure’s modular ecosystem supports this by allowing teams to compose AI solutions from loosely coupled services rather than a single, monolithic stack. When combined with modern AI frameworks such as LangChain, this makes it possible to abstract model providers and reduce the impact of future change.

In practice, this means teams can experiment, evolve, or even switch direction — towards open-source models, hybrid deployments, or alternative providers — without turning platform decisions made early on into long-term blockers.
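To make the idea of abstracting model providers more concrete, here is a minimal sketch in plain Python. It does not use LangChain or any Azure SDK. The `ChatModel` interface, the two stand-in adapters, and the `REGISTRY` keys are all hypothetical names chosen for illustration. In a real system, each adapter would wrap a provider's client library.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class ChatModel(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in for a hosted model; a real adapter would call a provider SDK here."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class UppercaseModel:
    """Second stand-in, representing an alternative provider or open-source model."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt.upper()}"


# Maps a configuration key to an adapter factory (keys are illustrative).
REGISTRY: dict[str, Callable[[], ChatModel]] = {
    "hosted": lambda: EchoModel("hosted-model"),
    "open-source": lambda: UppercaseModel("local-model"),
}


def build_model(provider: str) -> ChatModel:
    """Select an adapter by configuration value rather than by import."""
    try:
        return REGISTRY[provider]()
    except KeyError:
        raise ValueError(f"Unknown provider: {provider!r}") from None


def summarise(model: ChatModel, text: str) -> str:
    """Application code: depends only on the ChatModel interface."""
    return model.complete(f"Summarise: {text}")


if __name__ == "__main__":
    for provider in ("hosted", "open-source"):
        print(summarise(build_model(provider), "quarterly report"))
```

Because `summarise` only knows about the interface, switching provider becomes a configuration change plus one new adapter, not a rewrite of application code.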

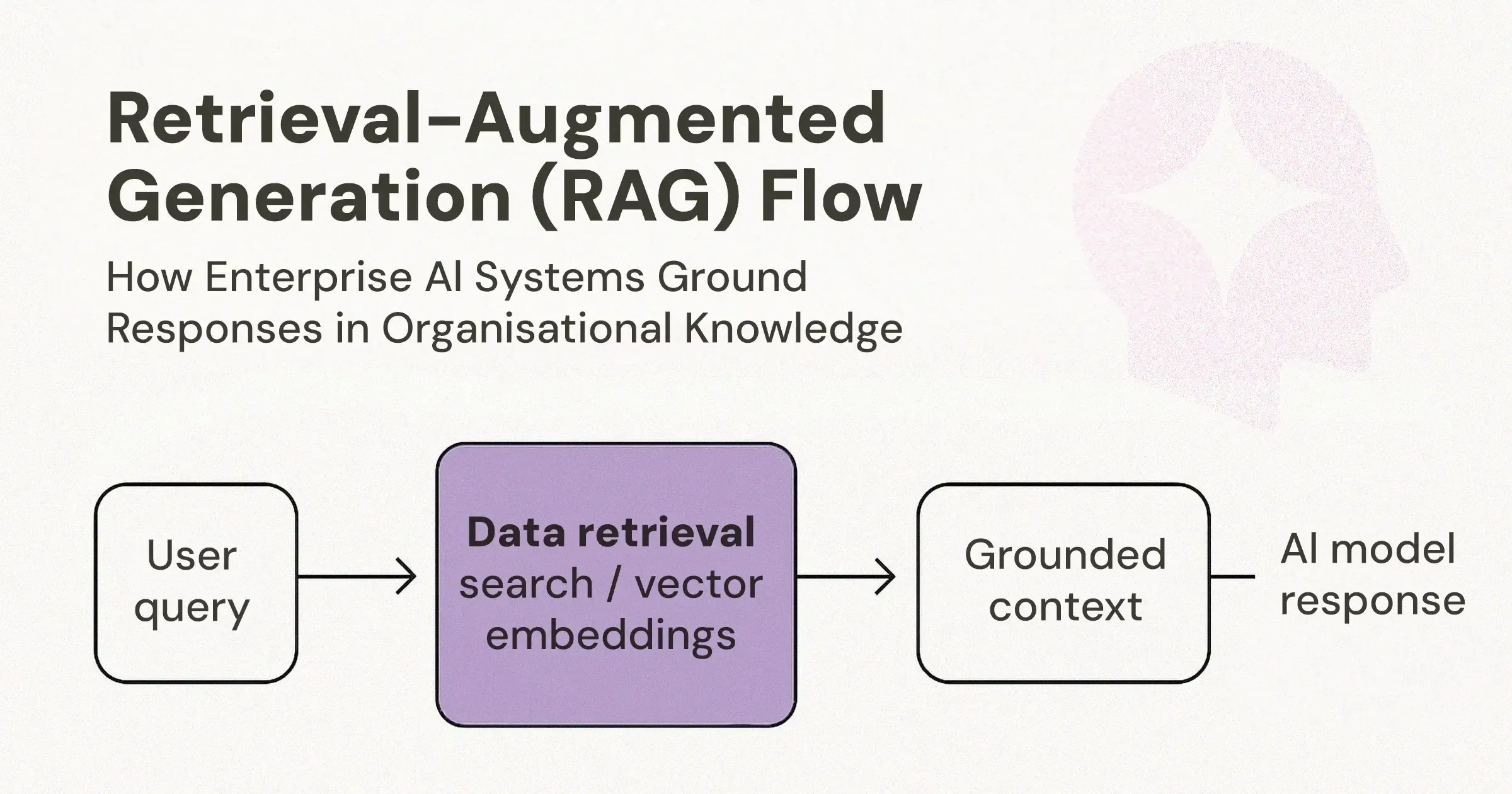

High-Quality Data Retrieval & Embeddings: Making AI Useful in the Real World

As soon as AI applications move beyond generic use cases, they need to work with organisational knowledge: documents, policies, records, historical data. This is the point where many promising AI initiatives quietly lose trust.

Teams quickly discover that without reliable data retrieval, AI systems hallucinate, respond inconsistently, or fail to ground answers in information the business actually cares about. Simple keyword search is rarely enough, but building and operating custom semantic or vector-based retrieval infrastructure is complex and costly.

At scale, data retrieval becomes less about search features and more about confidence, accuracy, and operational reliability. If users cannot trust where answers come from, adoption stalls — regardless of how good the underlying model is.

Azure AI Search addresses this challenge by providing a fully managed retrieval layer that supports keyword, semantic, vector-based, and hybrid search patterns. For embedding-based retrieval, it acts as a scalable vector store — enabling architectures such as retrieval-augmented generation (RAG) without requiring teams to maintain bespoke infrastructure.

In practice, using a managed service for this layer allows teams to focus on designing AI experiences that are grounded in trusted data, while reducing the operational burden and risk associated with running custom search and vector databases at scale.
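As a rough illustration of what a hybrid search pattern involves, the sketch below merges a keyword ranking and a vector ranking using reciprocal rank fusion, one common way to combine ranked lists. It is a toy, in-memory example and not how any managed service is implemented. The documents, the hand-written three-dimensional "embeddings", and all function names are assumptions made for illustration. A real system would obtain vectors from an embedding model.

```python
import math

# Toy corpus (illustrative content only).
DOCS = {
    "doc1": "expense policy for travel and hotel bookings",
    "doc2": "annual leave policy and holiday entitlement",
    "doc3": "reimbursement of business trip costs",
}

# Hand-written stand-ins for embeddings; a real system would compute these.
EMBEDDINGS = {
    "doc1": [0.9, 0.1, 0.0],
    "doc2": [0.1, 0.9, 0.0],
    "doc3": [0.8, 0.0, 0.2],
}


def keyword_rank(query: str, docs: dict[str, str]) -> list[str]:
    """Rank documents by how many query-term occurrences they contain."""
    terms = query.lower().split()
    scores = {
        doc_id: sum(text.lower().split().count(t) for t in terms)
        for doc_id, text in docs.items()
    }
    ranked = sorted(scores, key=lambda d: scores[d], reverse=True)
    return [d for d in ranked if scores[d] > 0]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_rank(query_vec: list[float], embeddings: dict[str, list[float]]) -> list[str]:
    """Rank documents by cosine similarity to the query vector."""
    return sorted(embeddings, key=lambda d: cosine(query_vec, embeddings[d]), reverse=True)


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each list contributes 1 / (k + rank) per document."""
    fused: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=lambda d: fused[d], reverse=True)


if __name__ == "__main__":
    by_keyword = keyword_rank("travel expense", DOCS)
    by_vector = vector_rank([1.0, 0.0, 0.0], EMBEDDINGS)
    print(reciprocal_rank_fusion([by_keyword, by_vector]))
```

In this toy data, the keyword ranking finds only `doc1`, while the vector ranking also surfaces `doc3`, which shares no words with the query. The fused list keeps both ahead of the unrelated `doc2`.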

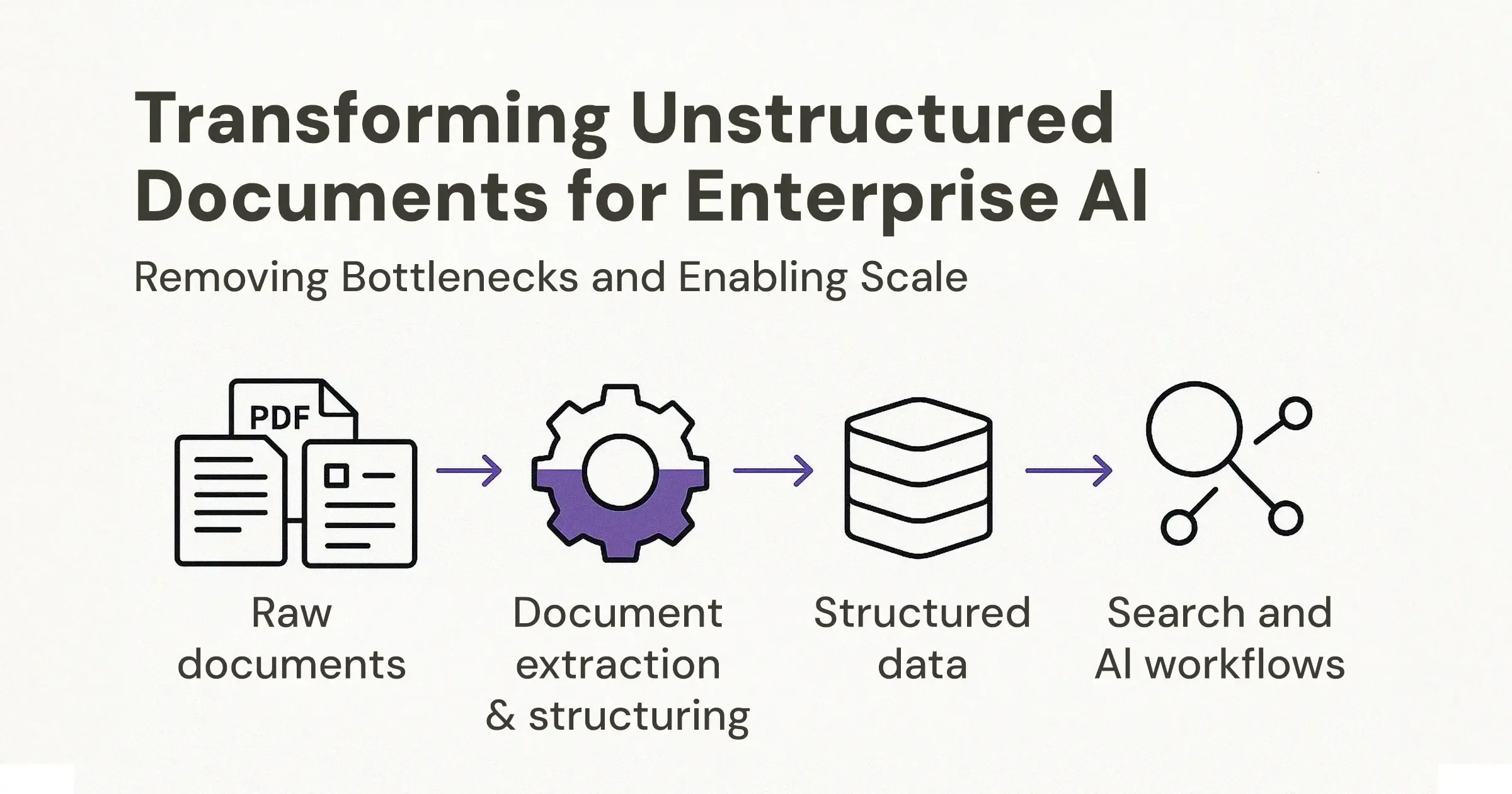

Intelligent Document Processing: Unlocking Unstructured Data at Scale

For many organisations, some of the most valuable information is locked inside documents: PDFs, scanned forms, spreadsheets, contracts, invoices, and images. This data is critical — but difficult to use.

At small scale, teams often rely on manual review or ad-hoc extraction. As volumes grow, these approaches quickly become a bottleneck. Processing slows down, errors creep in, and valuable information remains inaccessible to AI systems.

At scale, document handling stops being a data problem and becomes an operational one. If unstructured content cannot be reliably transformed into structured, machine-readable data, AI initiatives stall before they can deliver real value.

Azure Document Intelligence helps reduce this friction by providing pre-built and customisable models for extracting text, layout, tables, and key-value information from documents. Instead of building bespoke extraction pipelines, teams can automate document ingestion and feed structured outputs directly into downstream systems such as search indexes and AI workflows.

In practice, this allows organisations to move faster from raw documents to usable data — reducing manual effort, improving consistency, and removing one of the most common blockers in production-grade AI applications.
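The hand-off from extraction to downstream systems can be sketched as a small normalisation step. The example below assumes a hypothetical extraction result made of field names, values, and confidence scores. The `ExtractedField` and `IndexRecord` types, the `to_index_record` function, and the 0.8 threshold are illustrative choices, not the output format of any particular service.

```python
from dataclasses import dataclass, field


@dataclass
class ExtractedField:
    """One key-value pair from an extraction step (hypothetical shape)."""

    name: str
    value: str
    confidence: float  # assumed to be between 0.0 and 1.0


@dataclass
class IndexRecord:
    """Structured record ready to be sent to a search index or workflow."""

    doc_id: str
    fields: dict[str, str] = field(default_factory=dict)
    needs_review: list[str] = field(default_factory=list)


def to_index_record(
    doc_id: str,
    extracted: list[ExtractedField],
    min_confidence: float = 0.8,  # illustrative threshold, tune per use case
) -> IndexRecord:
    """Normalise field names and separate low-confidence values for review."""
    record = IndexRecord(doc_id=doc_id)
    for item in extracted:
        key = item.name.strip().lower().replace(" ", "_")
        if item.confidence >= min_confidence:
            record.fields[key] = item.value.strip()
        else:
            record.needs_review.append(key)
    return record


if __name__ == "__main__":
    sample = [
        ExtractedField("Invoice Number", " INV-001 ", 0.97),
        ExtractedField("Total Amount", "1200.00", 0.55),
    ]
    print(to_index_record("invoice-42", sample))
```

Keeping low-confidence fields out of the index while flagging them for review is one way to preserve consistency without returning to fully manual processing.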

Why This Matters: From Experimentation to Sustainable AI

Once AI becomes part of a core product or business process, the questions change. Speed is no longer just about how quickly a prototype can be built, but about how reliably features can be delivered, updated, and operated over time.

At this stage, organisations are effectively making a choice: continue treating AI as an experiment, or invest in the foundations required to run it as a long-term capability.

At scale, AI initiatives succeed or fail based on operational reality: how much effort is required to keep systems running, how safely they can evolve, and how confidently organisations can expand usage across teams, data sets, and users. This is also where many organisations underestimate the cost of getting these decisions wrong — in delayed roadmaps, spiralling operational spend, and erosion of trust from users and stakeholders.

Platforms and architectural choices directly shape this outcome. Reducing infrastructure overhead, standardising common AI capabilities, and relying on managed services where possible lowers long-term cost, risk, and dependency on scarce specialist skills.

In practice, this means organisations can move faster where it matters — iterating on product value rather than firefighting operational issues — while maintaining the flexibility to adapt as requirements, regulations, and technologies inevitably change.

Conclusion: Choosing Platforms That Support Real AI Products

For organisations moving beyond experimentation, the challenge is no longer whether AI can work, but whether it can be operated reliably, scaled safely, and evolved over time.

As this article has explored, many AI initiatives stall not because of model quality, but because of hidden complexity across infrastructure, data retrieval, document processing, and day-to-day operations. Platform and architectural choices play a decisive role in determining whether AI becomes a sustainable capability or remains stuck in perpetual pilot mode.

Microsoft Azure — particularly through services such as Azure AI Foundry, Azure AI Search, and Azure Document Intelligence — helps reduce this complexity by providing managed building blocks that address some of the most common operational bottlenecks.

Used thoughtfully, these capabilities allow teams to focus less on keeping AI systems running and more on delivering real, trusted value to users and the business. The result is not faster demos, but more resilient, adaptable, and scalable AI products that can grow with organisational needs rather than constrain them.

Work With a Partner Who Focuses on What Comes After the Demo

Moving from proof of concept to a production-ready AI product is rarely just a tooling decision — it’s an architectural, operational, and organisational challenge. Naitive by NewOrbit works with teams at exactly this transition point, helping organisations design, build, and scale AI systems that are resilient, adaptable, and fit for long-term use.

If you’re looking to move beyond pilots and start treating AI as a real product capability — with the right foundations, guardrails, and delivery approach — partnering with Naitive can help you make those decisions with clarity and confidence.